Disallowed URL received organic search traffic

This means that the URL in question is disallowed in robots.txt, yet it received organic search traffic, according to the connected Google Analytics and Google Search Console accounts.

Why is this important?

If a URL is disallowed, search engines have been given a specific instruction NOT to crawl the page. Typically this means that the website owner does not want the URL appearing in search results. If the URL is receiving search traffic, however, this implies that it is indexed.

What does the Hint check?

This Hint will trigger for any internal URL which recorded some clicks in Search Analytics, and/or some visits in Google Analytics, where the URL also matches a disallow rule in robots.txt.

This data was collected from Google Search Console and Google Analytics, via API, for the connected Property/View, and for the specified date range.
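As a rough illustration of the check described above, the sketch below flags URLs that have some clicks or visits and are also blocked by robots.txt. It is not the tool's actual implementation: the function name, the row format, the sample traffic figures and the Disallow rule are all assumptions made for the example. It uses Python's standard urllib.robotparser, which applies simple prefix matching and may not mirror Google's handling of wildcard rules.

```python
from urllib.robotparser import RobotFileParser


def urls_triggering_hint(traffic_rows, robots_txt, user_agent="Googlebot"):
    """Return URLs that recorded search traffic but match a disallow rule."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())

    flagged = []
    for row in traffic_rows:
        # Some clicks (Search Console) and/or some visits (Analytics).
        has_traffic = row.get("clicks", 0) > 0 or row.get("visits", 0) > 0
        if has_traffic and not parser.can_fetch(user_agent, row["url"]):
            flagged.append(row["url"])
    return flagged


# Hypothetical robots.txt content and traffic data, for illustration only.
robots_txt = """\
User-agent: *
Disallow: /pages/
"""

traffic_rows = [
    {"url": "https://example.com/pages/page-a", "clicks": 12, "visits": 0},
    {"url": "https://example.com/pages/page-b", "clicks": 0, "visits": 0},
    {"url": "https://example.com/blog/post-1", "clicks": 40, "visits": 35},
]

print(urls_triggering_hint(traffic_rows, robots_txt))
```

In this made-up data set only page-a is flagged: page-b is disallowed but has no traffic, and the blog post has traffic but is not disallowed.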

Examples that trigger this Hint:

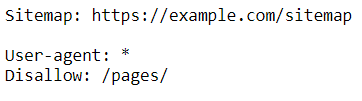

Consider the URL: https://example.com/pages/page-a, which has registered some search traffic.

The Hint would trigger for this URL if it matched a robots.txt 'disallow' rule, for example a rule that disallows everything under /pages/.

How do you resolve this issue?

Before jumping to any conclusions, it is worth remembering that the traffic data collected is historical, yet the crawl was done in real time - so it might be the case that this URL was previously crawlable, and is now disallowed.

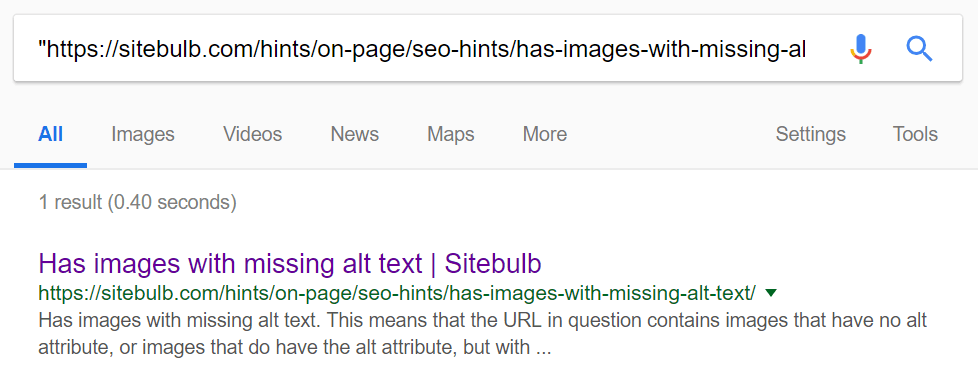

If this is the case, you would need to verify if the URL is still indexed, which you can do by searching Google for the URL in quote marks.

Depending on when the disallow rule was added, the search result may not display the title or meta description (as the disallow rule prevents Google from crawling the URL to find them).

This scenario represents a relatively common mistake. While robots.txt disallow rules will stop search engines from crawling pages, they do not affect indexing, at least in the short term. If you wish to remove a URL from the index, disallow is not the way to do it. Instead, you would need to either add a meta robots "noindex" tag (name="robots" content="noindex"), to 'request' that the page be removed, or a rel="canonical", to request that another page is indexed in its stead. Search engines can only see either signal if they are able to crawl the page, so the disallow rule needs to be lifted in the meantime.

Once some time has gone by and the page is no longer indexed, it would be appropriate to add the robots.txt disallow rule back in.
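Before relying on either signal, it can help to confirm that it is actually present in the page's HTML. The following is a minimal sketch using Python's standard html.parser; the class name, function name and sample markup are hypothetical, and it only inspects HTML tags (it does not look at HTTP headers).

```python
from html.parser import HTMLParser


class IndexingSignalParser(HTMLParser):
    """Collects the meta robots noindex directive and the canonical URL."""

    def __init__(self):
        super().__init__()
        self.noindex = False
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            directives = [d.strip().lower() for d in content.split(",")]
            if "noindex" in directives:
                self.noindex = True
        elif tag == "link" and "canonical" in (attr.get("rel") or "").lower().split():
            self.canonical = attr.get("href")


def indexing_signals(html):
    """Return the noindex flag and canonical URL found in an HTML string."""
    parser = IndexingSignalParser()
    parser.feed(html)
    return {"noindex": parser.noindex, "canonical": parser.canonical}


# Hypothetical page markup, for illustration only.
noindex_page = '<head><meta name="robots" content="noindex, follow"></head>'
canonical_page = '<head><link rel="canonical" href="https://example.com/pages/page-b"></head>'

print(indexing_signals(noindex_page))
print(indexing_signals(canonical_page))
```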

If the disallow rule has not been added recently, then you will need to look into other signals that might encourage Google to index a disallowed URL, for example:

- Are other URLs specifying the disallowed URL as their canonical?

- Is the disallowed URL included in any XML Sitemaps?
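
Both questions above can be checked against crawl data. The sketch below is one possible approach, assuming you already have a mapping of each page to its declared canonical and the raw XML of a sitemap; the function names, the mapping and the sample sitemap are all hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Return the set of <loc> URLs listed in a sitemap XML string."""
    root = ET.fromstring(sitemap_xml)
    return {
        loc.text.strip()
        for loc in root.findall(".//sm:loc", SITEMAP_NS)
        if loc.text
    }


def pages_canonicalising_to(target_url, canonical_map):
    """Return other pages that declare target_url as their canonical."""
    return sorted(
        page
        for page, canonical in canonical_map.items()
        if canonical == target_url and page != target_url
    )


# Hypothetical inputs, for illustration only.
disallowed_url = "https://example.com/pages/page-a"

sitemap_xml = """\
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/pages/page-a</loc></url>
  <url><loc>https://example.com/blog/post-1</loc></url>
</urlset>"""

canonical_map = {
    "https://example.com/pages/page-a?ref=nav": "https://example.com/pages/page-a",
    "https://example.com/blog/post-1": "https://example.com/blog/post-1",
}

print(disallowed_url in sitemap_urls(sitemap_xml))
print(pages_canonicalising_to(disallowed_url, canonical_map))
```

If either check turns up a match, the site is sending signals that conflict with the disallow rule.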