In most circumstances, your computer should be able to handle sites with fewer than 500,000 pages - but when you need to go larger than this, it requires a change of mindset.

This is the sort of task which, in the past, would have only been available with a very expensive cloud crawler, costing hundreds or even thousands of dollars. Achieving the same thing with a desktop crawler is perfectly possible, but you need to be aware of the limitations.

A desktop crawler like Sitebulb uses up the resources on your local machine - processing power, RAM and threads (which are used for processing specific tasks). The more you ask Sitebulb to do, the fewer resources will be available for your other tasks.

Ideally, if you are crawling a site over 500,000 pages, you would not use your computer for anything else - just leave it to crawl (perhaps overnight). If you do need to use it, then try to stick to programs that are less memory intensive - so shut down Photoshop and at least half of the Chrome tabs you didn't even need to be open in the first place.

In reality, it almost always works best when you have a spare machine you can just stick Sitebulb on to chug away on the audit, freeing up your own machine for your other work. If this isn't an option, then a cheap VPS would be fine, or you can spin up an AWS instance and run it there instead.

The main point I am trying to make here is that you can't really expect to crawl a massive site in the background and at the same time use your computer as you normally would.

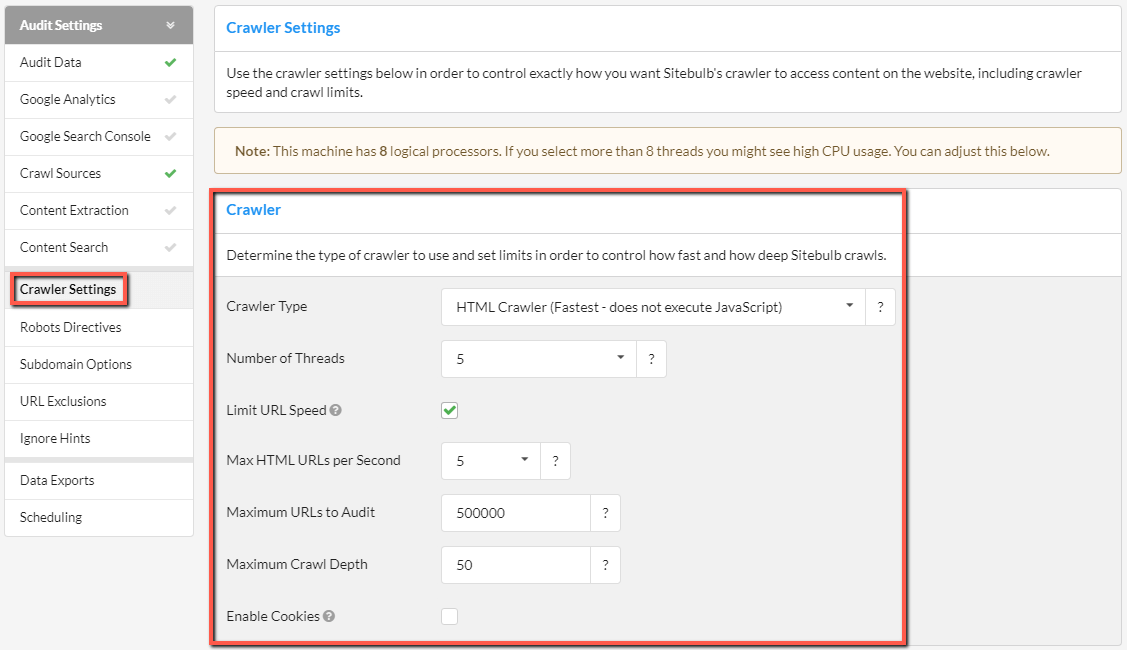

When crawling bigger sites, you typically also want to crawl faster, because the audit will take a lot longer to complete. It is straightforward to increase the speed at which Sitebulb will try to crawl. Go to Crawler Settings when setting up the audit, and adjust the 'Crawler' options.

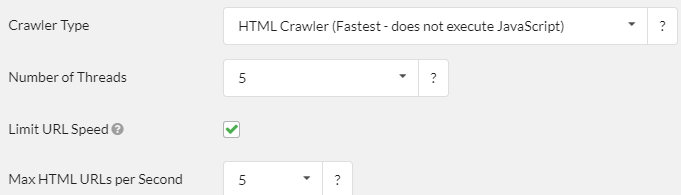

When using the HTML Crawler, increasing the number of threads is the main driver of speed increases. You can also increase the URL/second limit, or remove it completely by unticking the box. When crawling with the Chrome Crawler, the variable is simply the number of Instances you wish to use.
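To get a feel for why speed matters at this scale, the arithmetic can be sketched as below. This is only a rough illustration: the function name and the example rates are assumptions made for the sake of the example, not Sitebulb settings or measured figures, and a real crawl will not hold a perfectly constant rate.

```python
def estimated_crawl_hours(total_urls: int, urls_per_second: float) -> float:
    """Rough crawl duration in hours, assuming a constant crawl rate."""
    if urls_per_second <= 0:
        raise ValueError("urls_per_second must be positive")
    return total_urls / urls_per_second / 3600


# Hypothetical rates, chosen only to show the scale of the difference
# on a 500,000 page site.
for rate in (5, 10, 25):
    hours = estimated_crawl_hours(500_000, rate)
    print(f"{rate:>2} URL/s -> {hours:.1f} hours")
```

At 5 URL/s a 500,000 page site works out at more than a full day of crawling, which is why a modest increase in sustained speed can make a big difference to when the audit finishes.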

There are 2 main factors to consider when ramping up the speed of the crawler:

As per the above, when Sitebulb uses up computer resources, it will slow down other programs on your machine and make it more difficult for you to do other work. The reverse is also true - if you are using lots of other programs, this will slow down Sitebulb, as it will have fewer resources available to use.

The other factors in play include:

We have written at length about crawling responsibly, and this is even more important when you are ratcheting the speed up.

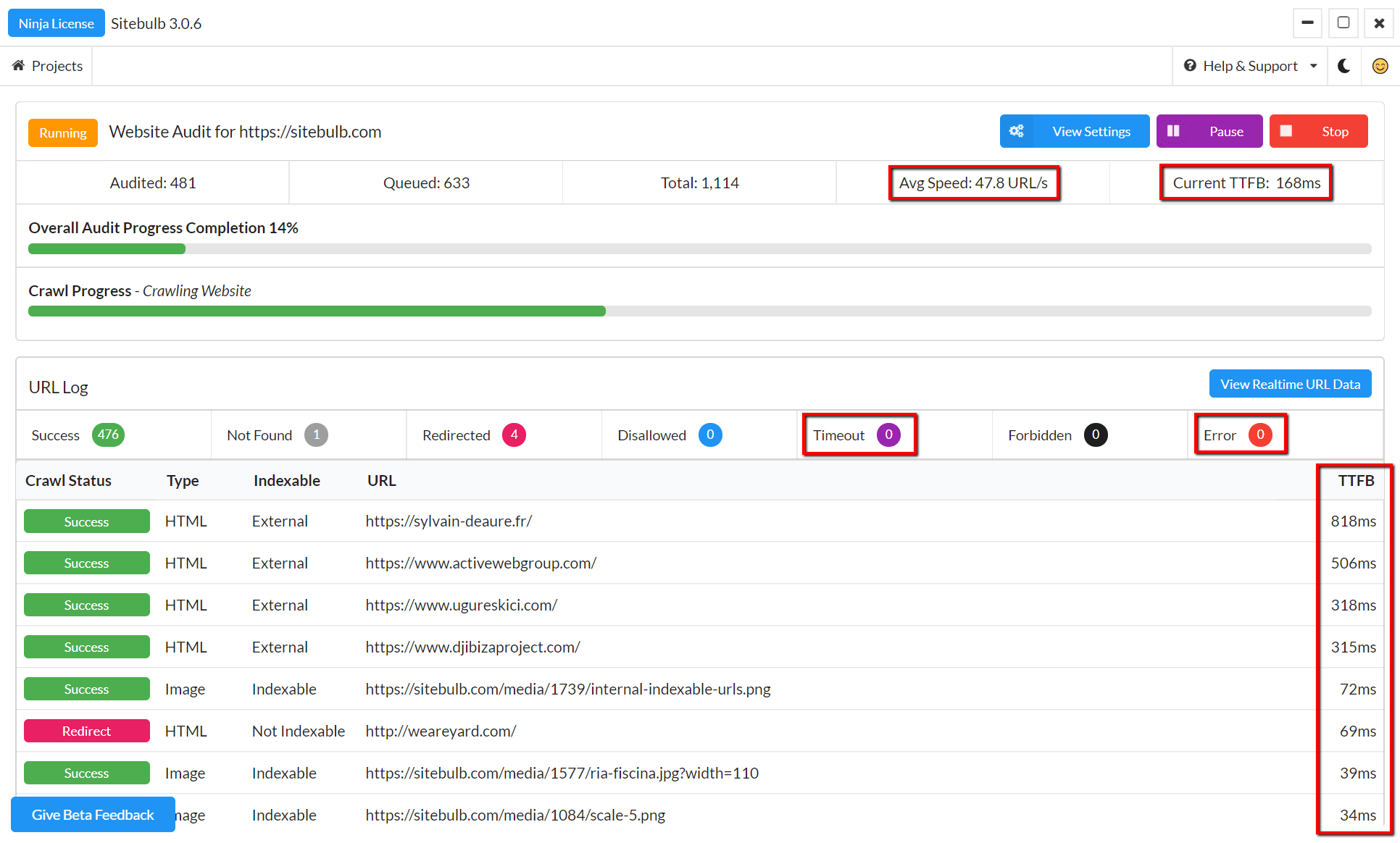

When trying to determine how fast you can push it on any given website, some trial and improvement is required. You need to become familiar with the crawl progress screen and the data it is showing you.

In particular:

You are looking for a steady, consistent, predictable audit with few errors. If this is what you are seeing, you can start to slowly increase the threads and speed limits. Do this by pausing the audit and selecting 'Update audit settings'. Once you resume the audit, re-check the crawl progress to make sure it remains steady, and check whether the speed has increased. Iterate through this process a number of times, incrementally increasing the threads each time, until you are comfortable with the speed.
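The pause, adjust, resume and re-check loop described above can be thought of as a simple decision rule. The sketch below is purely illustrative: the changes themselves are made by hand in Sitebulb, and the `CrawlSnapshot` fields, the `next_thread_count` helper, the 1% error threshold and the step size are all hypothetical choices for this example, not anything Sitebulb exposes or recommends.

```python
from dataclasses import dataclass


@dataclass
class CrawlSnapshot:
    """Figures noted by hand from the crawl progress screen (hypothetical fields)."""
    threads: int
    urls_per_second: float
    error_rate: float  # fraction of requests that errored or timed out, 0.0 to 1.0


def next_thread_count(previous: CrawlSnapshot, current: CrawlSnapshot,
                      max_error_rate: float = 0.01, step: int = 1) -> int:
    """Suggest a thread count for the next pause-and-update cycle."""
    # Too many errors: the site or the machine is struggling, so back off.
    if current.error_rate > max_error_rate:
        return max(1, current.threads - step)
    # More threads did not buy more speed: hold at the current setting.
    if (current.threads > previous.threads
            and current.urls_per_second <= previous.urls_per_second):
        return current.threads
    # Steady and within the error budget: try one more small increase.
    return current.threads + step


# Example readings (made up) taken before and after one adjustment.
before = CrawlSnapshot(threads=5, urls_per_second=8.0, error_rate=0.0)
after = CrawlSnapshot(threads=6, urls_per_second=9.5, error_rate=0.002)
print(next_thread_count(before, after))
```

Keeping the step small mirrors the advice to increase incrementally: each change is easy to attribute to a single adjustment, and easy to undo if errors start to climb.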

Some other factors can also affect the speed:

The main advice on offer here is to treat the process as an experiment, and learn over time the best way to crawl each website with the machine(s) you have available.