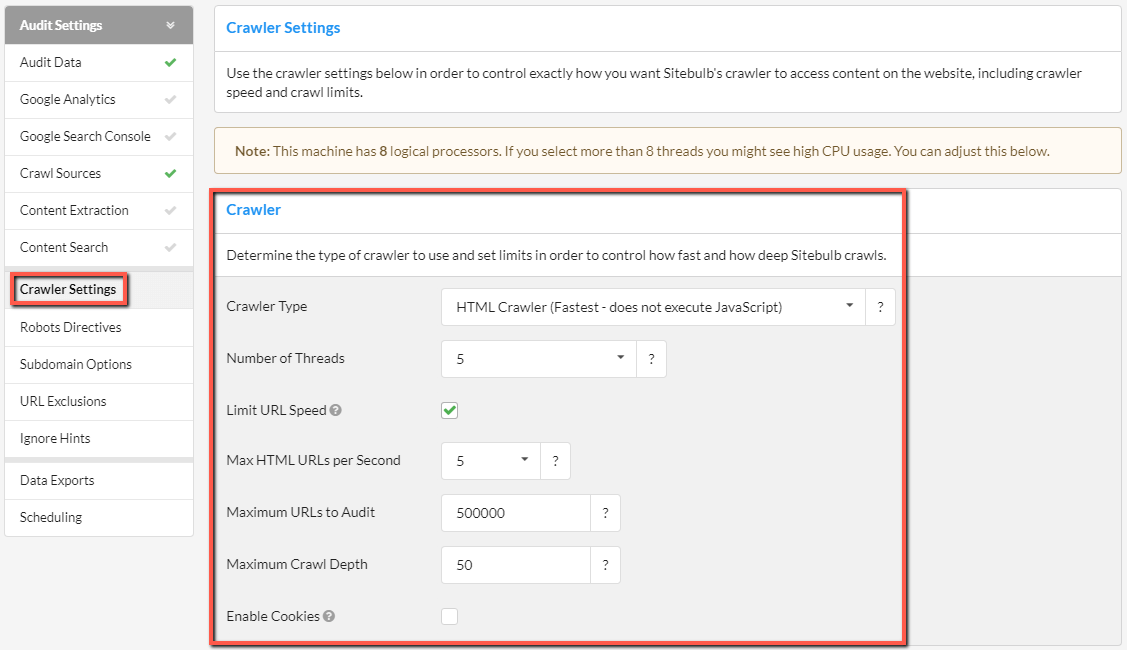

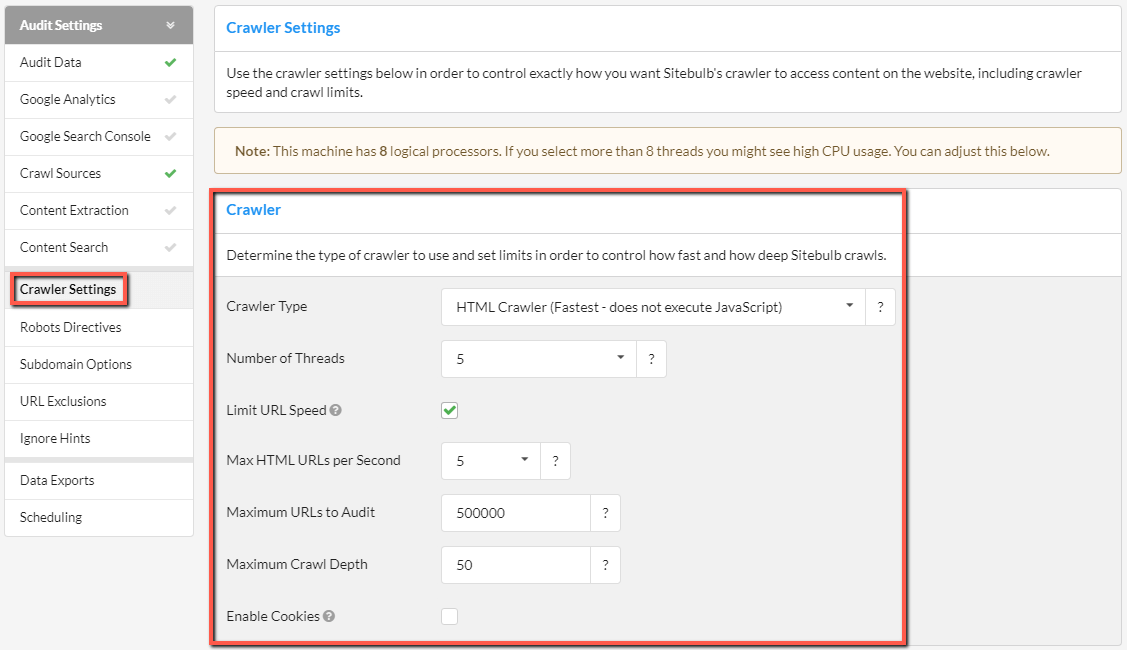

For certain websites or audits, you may want more control over how Sitebulb crawls, for example to increase the speed or add crawl limits. You can do this via the Crawler Settings menu option on the left-hand side.

These options give you various ways to control how Sitebulb crawls:

Crawler type

The Crawler Type has two options:

- HTML Crawler - this is the default option, and will be suitable for most websites. This uses 'traditional' HTML extraction, and is a lot quicker.

- Chrome Crawler - select this option if you need to crawl a site that uses a JavaScript framework, or has a lot of content dependent on JavaScript. The Chrome Crawler will render the page using a version of headless Chrome (essentially, a Chrome browser without a user interface). In order to render, the Chrome Crawler will need to download all the page resources, so it takes a lot longer.

To help determine whether a website needs to be crawled using the Chrome Crawler, run an initial audit using the Chrome Crawler (this could be a sample audit, or an audit you simply stop early). Then compare the response HTML with the rendered HTML in your Sitebulb audit, to see if important page content depends on JavaScript rendering.
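The comparison described above can also be illustrated outside of Sitebulb. The following is a minimal sketch, not Sitebulb's own method: it assumes you have already saved the response HTML and the rendered HTML for a page as strings, and the function names (`visible_words`, `js_dependent_share`) are hypothetical. It measures what share of the rendered text is missing from the response HTML.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects visible text, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.words = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0:
            self.words.extend(data.split())


def visible_words(html):
    """Return the list of visible words in an HTML string."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.words


def js_dependent_share(response_html, rendered_html):
    """Fraction of rendered words that do not appear in the response HTML."""
    rendered = visible_words(rendered_html)
    if not rendered:
        return 0.0
    response = set(visible_words(response_html))
    missing = [word for word in rendered if word not in response]
    return len(missing) / len(rendered)


# Hypothetical page: the response HTML is an empty app shell,
# and all of the content only appears after rendering.
response_html = "<html><body><div id='app'></div><script>render()</script></body></html>"
rendered_html = "<html><body><div id='app'><h1>Blue widgets</h1><p>In stock today</p></div></body></html>"

print(js_dependent_share(response_html, rendered_html))
```

A share close to 1 suggests the page content relies heavily on JavaScript, so the Chrome Crawler is likely the better choice; a share close to 0 suggests the HTML Crawler will see the same content.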

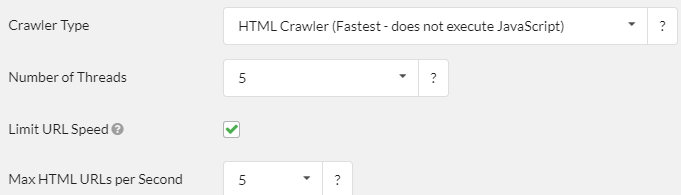

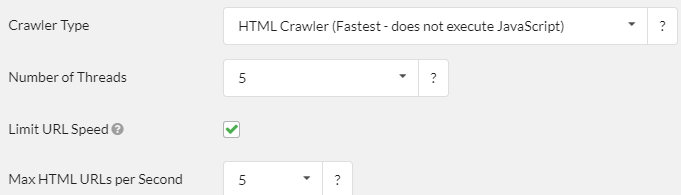

Crawl speed (HTML Crawler)

With the HTML Crawler, crawl speed is controlled by how many threads are used. The Chrome Crawler handles speed in a fundamentally different way.

These options let you make the crawler go faster or slower:

- Number of Threads - this controls how much CPU usage is allocated to Sitebulb. In general, the more threads you use, the faster it will go - however, this is capped by the number of logical processors (cores) you have in your machine.

- Limit URL Speed - a toggle you can use to switch on a "max URLs/second" speed cap. If switched on, Sitebulb will not crawl faster than the specified URLs/second rate.

- Max HTML URLs per Second - you can only set this value if the Limit URL Speed option above is switched on. If so, this value will provide the limit. For instance, if this is set to 5, Sitebulb will not download more than 5 HTML URLs per second.
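To make the two ideas in this list concrete, here is a minimal sketch of a thread cap and a URLs/second cap. It is an illustration only, not Sitebulb's implementation: both function names are hypothetical, and spacing requests evenly is just one assumed way of enforcing a per-second limit.

```python
import os


def effective_threads(requested, logical_processors=None):
    """Cap the requested thread count at the number of logical processors."""
    if logical_processors is None:
        # os.cpu_count() reports the logical processors on this machine.
        logical_processors = os.cpu_count() or 1
    return max(1, min(requested, logical_processors))


def earliest_start_times(url_count, max_urls_per_second):
    """Earliest start time (in seconds) of each request under a URLs/second cap.

    Assumes requests are spaced evenly, one every 1/max_urls_per_second seconds.
    """
    interval = 1.0 / max_urls_per_second
    return [i * interval for i in range(url_count)]


# Asking for 16 threads on a machine with 8 logical processors yields 8.
print(effective_threads(16, logical_processors=8))

# With a cap of 5 URLs/second, the sixth request cannot start
# until roughly one second after the first.
print(earliest_start_times(6, 5))
```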

If you wish to learn more about crawling fast, we suggest you read our documentation, 'How to Crawl Really Fast'. Alternatively, to examine the benefits of a more measured approach, check out our article, 'How to Crawl Responsibly'.

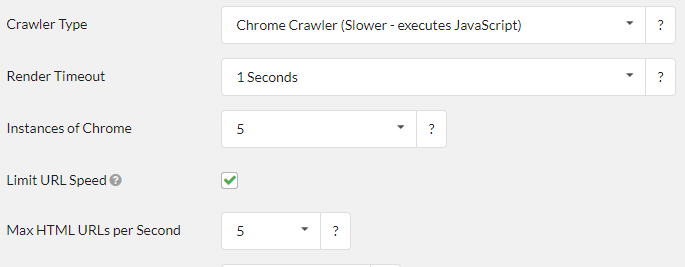

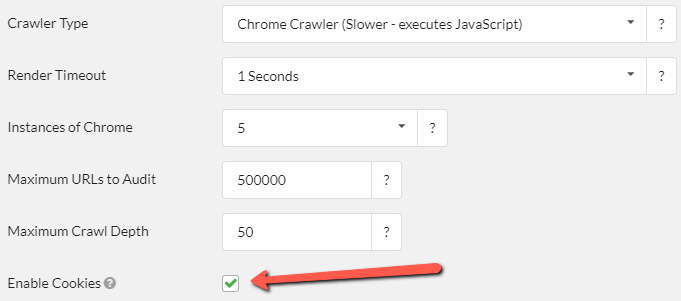

Crawl speed (Chrome Crawler)

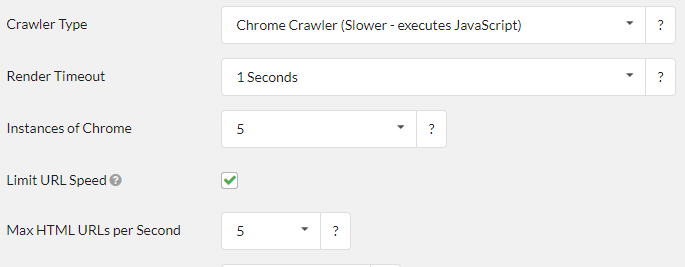

When the Chrome Crawler is selected, the Crawler Configuration page will look slightly different.

There is the option to select how many Chrome instances you wish to use for crawling. Again, this is dependent upon the number of logical processors you have on your machine, and pushing the value up may have adverse effects on your machine while crawling.

Adjusting these values will affect how fast Sitebulb is able to crawl:

- Render Timeout - this determines how long Sitebulb will pause to wait for content to render, before parsing the HTML. The lower the value you use, the faster Sitebulb will crawl.

- Instances of Chrome - this determines how many logical processors will be used for rendering with headless Chrome, and is dependent upon the number of logical processors available on your machine (just like threads with the HTML Crawler). The higher the value you use, the faster Sitebulb will crawl, within the limitations of your machine.

- Limit URL Speed - if ticked, the crawler will not exceed the maximum number of URLs per second set below.

- Max HTML URLs per Second - in addition to the instances of Chrome selected above, you can further limit the speed of the crawler by capping the number of URLs crawled per second. Lower speeds limit the number of concurrent connections, which helps prevent server slowdown for website users.
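As a rough way to reason about how these settings interact, the sketch below estimates crawl throughput from them. It is a back-of-envelope model under stated assumptions, not how Sitebulb actually schedules work: it assumes each Chrome instance handles one page at a time, that every page takes its load time plus the full render timeout, and the function name and the `avg_load_s` parameter are hypothetical.

```python
def estimated_urls_per_second(instances, render_timeout_s, avg_load_s,
                              max_urls_per_second=None):
    """Rough throughput estimate for a rendering crawl.

    Assumes each instance processes one page at a time and spends
    avg_load_s + render_timeout_s seconds on every page.
    """
    per_instance = 1.0 / (avg_load_s + render_timeout_s)
    rate = instances * per_instance
    if max_urls_per_second is not None:
        # The URL speed limit acts as a ceiling on top of everything else.
        rate = min(rate, max_urls_per_second)
    return rate


# 4 instances, 1 second render timeout, 1 second average load time.
print(estimated_urls_per_second(4, 1.0, 1.0))  # 2.0

# Raising the render timeout to 3 seconds halves the estimate.
print(estimated_urls_per_second(4, 3.0, 1.0))  # 1.0

# A URL speed limit of 1.5 caps the first scenario.
print(estimated_urls_per_second(4, 1.0, 1.0, max_urls_per_second=1.5))  # 1.5
```

Under this model, lowering the render timeout or adding instances raises the estimate, while the URL speed limit only ever lowers it, which matches the direction of each setting described above.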

If you wish to learn more about crawling fast, we suggest you read our documentation, 'How to Crawl Really Fast'. Alternatively, to examine the benefits of a more measured approach, check out these articles: 'How to Crawl Responsibly' and 'How to control URLs/second for chrome crawler'.

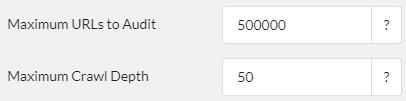

Crawler limits

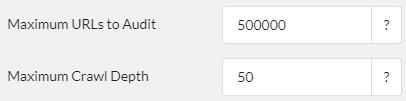

There are two ways to limit the crawler so that it crawls fewer (or more) pages:

- Maximum URLs to Audit - The total number of URLs Sitebulb will crawl. Once it hits this limit, Sitebulb will stop crawling and generate the reports. The maximum URLs you are able to crawl per website depends on your Sitebulb plan.

- Maximum Crawl Depth - The number of levels deep Sitebulb will crawl (where the homepage is 0 deep, and all URLs linked from the homepage are 1 deep, and so on). This is useful if you have extremely deep pagination that keeps spawning new pages.
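The effect of the two limits can be sketched with a breadth-first crawl over a small in-memory link graph. This is a minimal illustration, assuming a hypothetical `crawl` function and a made-up site structure; it is not Sitebulb's crawler. The start URL is at depth 0, and URLs linked from it are at depth 1, as described above.

```python
from collections import deque


def crawl(links, start, max_urls=None, max_depth=None):
    """Breadth-first crawl of a link graph with URL and depth limits.

    links maps each URL to the list of URLs it links to.
    Returns a list of (url, depth) pairs in crawl order.
    """
    depths = {start: 0}
    queue = deque([start])
    crawled = []
    while queue:
        if max_urls is not None and len(crawled) >= max_urls:
            break  # URL limit reached: stop crawling
        url = queue.popleft()
        crawled.append(url)
        depth = depths[url]
        if max_depth is not None and depth >= max_depth:
            continue  # do not follow links beyond the depth limit
        for target in links.get(url, []):
            if target not in depths:
                depths[target] = depth + 1
                queue.append(target)
    return [(u, depths[u]) for u in crawled]


# Hypothetical site with pagination that keeps linking to a next page.
links = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog?page=2"],
    "/blog?page=2": ["/blog?page=3"],
    "/blog?page=3": ["/blog?page=4"],
}

print(crawl(links, "/", max_depth=2))
# [('/', 0), ('/blog', 1), ('/about', 1), ('/blog?page=2', 2)]

print(crawl(links, "/", max_urls=2))
# [('/', 0), ('/blog', 1)]
```

With a depth limit of 2, the pagination chain is cut off after page 2, which is the kind of runaway pagination the Maximum Crawl Depth setting is useful for.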

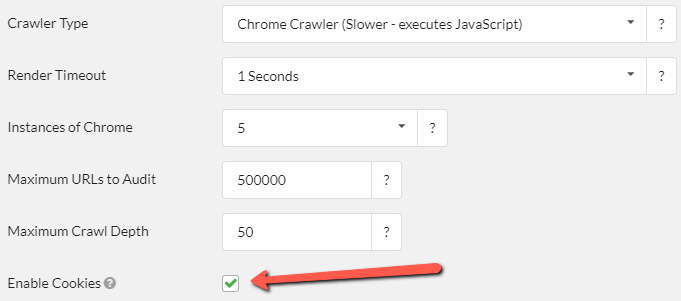

Enable cookies

The final option in the Crawler Settings section is 'Enable Cookies' - checking this option will persist cookies throughout the crawl, which is necessary for crawling some websites.
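To show what persisting cookies means in practice, here is a minimal sketch using Python's standard library. It is an illustration only and says nothing about how Sitebulb stores cookies; the `build_crawl_opener` function name is hypothetical. With a cookie jar attached, cookies set by one response are sent back on later requests made through the same opener.

```python
import urllib.request
from http.cookiejar import CookieJar


def build_crawl_opener(enable_cookies):
    """Build a URL opener that optionally persists cookies between requests."""
    handlers = []
    jar = None
    if enable_cookies:
        # The jar stores cookies from responses and replays them on
        # subsequent requests made with the same opener.
        jar = CookieJar()
        handlers.append(urllib.request.HTTPCookieProcessor(jar))
    return urllib.request.build_opener(*handlers), jar


opener, jar = build_crawl_opener(enable_cookies=True)
# Every request made via opener.open(url) now shares the same cookie jar.
```

Without the cookie handler, each request starts with no cookies, which is why some sites (for example, those that set a session or consent cookie on the first visit) can only be crawled properly with cookies enabled.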